API Firewall

API Firewall is an API-native application micro-firewall that acts as the security gateway to your enterprise architecture, and is the single point of entry and exit for all API calls. It is the executor of API Protection: API Protection generates a protection configuration based on the OpenAPI (formerly Swagger) definition of your API, and API Firewall runs this configuration when it is deployed. Each API Firewall instance is based on the same base image, but tailored for the API in question based on the protection configuration, and it provides a virtual host for that particular API.

Both OpenAPI Specification v2 and v3.0 are supported.

API Firewall runtime is fully optimized so that you can deploy and run it on any container orchestrator, such as Docker, Kubernetes, or Amazon ECS. Minimal latency and footprint mean that you can deploy API Firewall against hundreds of API endpoints with very little impact. API Firewall protects traffic in both the North-South and East-West directions.

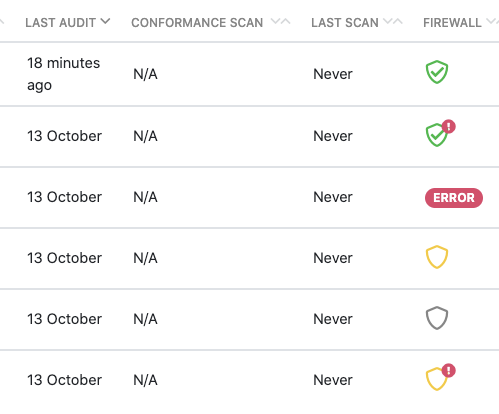

You can quickly check directly in the API collection whether your APIs are protected by an API Firewall instance and when the protection configuration was last updated. Hover over the icons for a summary, or click the status icon for more details.

Automatic contract enforcement in API Firewall

With API Firewall, you do not have to write separate policies to protect your API, or rely on AI to guess which traffic is valid and which is not. If your OpenAPI definition gets a good score in API Security Audit, you have already done the work needed to protect your API while developing it. The protection also evolves alongside your API.

API Protection creates an allowlist of the valid operations and input data based on the API contract, and API Firewall enforces this configuration on all transactions, both incoming requests and outgoing responses. Transactions containing anything not described in the API definition are automatically blocked:

- Messages where the input or output data does not conform to the JSON schema

- Undocumented methods (POST, PUT, PATCH...)

- Undocumented error codes

- Undocumented schemas

- Undocumented query or path parameters

API Firewall automatically enforces the API contract spelled out in your API definition. It filters out unwanted requests, only letting through the requests that should be allowed based on the OpenAPI definition of the API it protects. API Firewall also blocks any responses from the API that have not been declared or that do not match the API definition.
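To make the allowlist idea concrete, here is a minimal Python sketch, assuming a tiny hand-written OpenAPI fragment (SPEC) and hypothetical helper names (request_violations, response_violations). It is not how API Firewall is implemented: it only checks methods, query parameters, and response codes against what the contract declares, and it deliberately skips JSON schema validation, path parameter checks, and "default" or range (4XX) response codes.

```python
from __future__ import annotations

from typing import Any

# Hypothetical OpenAPI 3.0 fragment, used only for illustration.
SPEC: dict[str, Any] = {
    "paths": {
        "/pets/{petId}": {
            "parameters": [{"name": "petId", "in": "path", "required": True}],
            "get": {
                "parameters": [{"name": "fields", "in": "query"}],
                "responses": {"200": {}, "404": {}},
            },
        }
    }
}


def request_violations(spec: dict[str, Any], path_template: str, method: str,
                       query_params: dict[str, str]) -> list[str]:
    """Return the reasons a request would be rejected; an empty list means allowed."""
    path_item = spec.get("paths", {}).get(path_template)
    if path_item is None:
        return [f"undocumented path {path_template}"]
    operation = path_item.get(method.lower())
    if not isinstance(operation, dict):
        return [f"undocumented method {method.upper()}"]
    # Parameters may be declared on the path item and on the operation.
    params = path_item.get("parameters", []) + operation.get("parameters", [])
    declared = {p["name"] for p in params if p.get("in") == "query"}
    return [f"undocumented query parameter {name}"
            for name in sorted(query_params) if name not in declared]


def response_violations(spec: dict[str, Any], path_template: str, method: str,
                        status: int) -> list[str]:
    """Reject responses whose status code the operation does not declare."""
    operation = spec["paths"][path_template][method.lower()]
    if str(status) not in operation.get("responses", {}):
        return [f"undocumented response code {status}"]
    return []
```

For example, request_violations(SPEC, "/pets/{petId}", "DELETE", {}) reports an undocumented method, and response_violations(SPEC, "/pets/{petId}", "GET", 500) reports an undocumented response code, because neither is declared in the fragment. Anything not listed is rejected, which is the essence of an allowlist.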

For more details on the validation that API Firewall performs, see How API Firewall validates API traffic.

Deactivating automatic contract enforcement

Automatic contract enforcement through the allowlist is the protection you get for your API out of the box. By default, the allowlist is on in API Firewall, and it blocks all API requests that do not conform to the OpenAPI definition of the protected API. However, in some cases you might want to switch automatic contract enforcement off, either for the whole API or for part of it.

Deactivating automatic contract enforcement should always be done case by case, after careful consideration. Deactivating it in the wrong place could have serious consequences for the security of your API.

You can use the x-42c-deactivate-allowlist vendor extension to the OpenAPI Specification (OAS) to switch off automatic contract enforcement. You can also combine the deactivation with the directional allowlist protections (allowlist active only for requests or only for responses).
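Because every deactivation should be a deliberate, reviewed decision, it can help to list each place where a definition switches the allowlist off before you create a protection configuration. The Python sketch below assumes the extension is set to the boolean true and may appear at the top level, on a path item, or on an operation; check the x-42c extensions reference for where it is actually allowed. The function name deactivated_locations is hypothetical.

```python
from __future__ import annotations

from typing import Any

EXTENSION = "x-42c-deactivate-allowlist"
# Operation keys allowed in an OpenAPI path item.
HTTP_METHODS = ("get", "put", "post", "delete", "options", "head", "patch", "trace")


def deactivated_locations(spec: dict[str, Any]) -> list[str]:
    """List every location where the definition switches the allowlist off.

    Assumption: the extension value is the boolean true and may be set at the
    top level, on a path item, or on an operation.
    """
    found: list[str] = []
    if spec.get(EXTENSION) is True:
        found.append("(whole API)")
    for path, item in spec.get("paths", {}).items():
        if item.get(EXTENSION) is True:
            found.append(path)
        for method in HTTP_METHODS:
            operation = item.get(method)
            if isinstance(operation, dict) and operation.get(EXTENSION) is True:
                found.append(f"{method.upper()} {path}")
    return found
```

You could load a YAML definition with PyYAML's yaml.safe_load and pass the resulting dict to this function, then review each reported location before deploying.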

For more details, see Deactivate automatic contract enforcement in API Firewall.

Additional protections

API Protection also lets you provide instructions to API Firewall by adding the required security measures as protections directly in the OpenAPI definition of your API.

When you create a protection configuration for API Firewall, API Protection builds it from the OpenAPI definition of the API and enriches it with the security information from the protections. Based on the protections you have applied to your API, API Protection builds the sequences of actions that the firewall executes on incoming requests and outgoing responses. This is the configuration that the API Firewall instance loads when it starts.

API Firewall blocking level

By default, active API Firewall instances protecting an API block any transactions that are in violation of the API contract. However, if needed, you can control the blocking level in API Protection and choose what exactly API Firewall blocks.

Changing the blocking level of an API Firewall instance also decreases the level of protection it gives to your API and can leave your API unprotected! The instance continues to execute security policies normally but does not block any transactions; it only reports what would have been blocked. Proceed with caution, and only change the blocking level when absolutely necessary.

Changing the blocking level of an API Firewall instance can help you, for example, to:

- Discover potential issues when introducing API Firewall into the path of API traffic: are existing users blocked, and if so, why?

- Detect false positives, if any

- Troubleshoot detected problems

For more details, see Set API Firewall blocking level.

Custom blocking mode

Sometimes, securing your APIs already in production can become problematic if the API was designed and implemented before API security was really considered. Although the automatic allowlist based directly on the OpenAPI definition of the API is powerful, it does mean that the API must be fully documented and the implementation must conform to that documentation.

While this should be the goal when designing your APIs, in reality your older existing APIs might not meet it yet, and for large APIs, reworking the API to get everything in order could push the start of its protection too far into the future. On the other hand, deploying API Firewall to protect such APIs with the normal blocking levels could block parts of the API that are not included in the allowlist, which is not an option for live APIs.

This is where the custom blocking mode (level 6) can help. Custom blocking mode adapts the behavior of API Firewall to your needs: it lets you configure more granularly how to handle unknown requests that are not described in the OpenAPI definition, and customize the level of firewall protection on the known API endpoints. This lets you iterate on your API and progressively improve its API definition while ensuring that the endpoints that are ready are secured. You can even define additional protections, like rate limiting, for crucial endpoints that need extra security (such as /login) while customizing the handling of the other endpoints as needed.

Custom blocking mode is only available for API Firewall images v1.1.1 or later.

API Firewall logs

As part of their operation, API Firewall instances produce three types of logs:

- Transaction logs

- Error logs

- Access logs

By default, API Firewall relays transaction logs back to 42Crunch Platform. System logs on access and errors from the API Firewall instance itself are located under /opt/guardian/logs in the file system of the API Firewall container.

Transaction logs

Transaction logs (api-<API UUID>.transaction.log) are the logs that API Firewall uploads to 42Crunch Platform by default. They provide detailed information on each API transaction that the firewall instance protecting the API blocked, such as:

- The method and path that was called

- Where the call originated

- How long the call took

- Why the call got blocked

For security reasons, when API Firewall blocks a request, the returned response contains just the UUID of the transaction and a minimal description of the issue. This way, the response does not reveal any information on the API, the backend, or implementation details that could be used to craft attacks against them. In 42Crunch Platform, you can then search the transaction logs for that UUID to retrieve the detailed trace of the blocked transaction.

Unknown transactions, like misdirected requests and sometimes attacks, are written to api-unknown.transaction.log. Any transaction that the API Firewall instance cannot map to the API that it is protecting is classified as an unknown transaction. For example, API Firewall might not be able to match a requested host (hostname:port) or path in the incoming HTTP request with the OpenAPI definition of the protected API. In this case, the request is logged as an unknown transaction.
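The host and path matching described above can be sketched in Python as follows. This is an illustration, not API Firewall's actual matcher: the function names template_to_regex and match_transaction are hypothetical, and the sketch assumes the API is described by a single hostname:port and base path. A request that matches no template is what this section calls an unknown transaction.

```python
from __future__ import annotations

import re


def template_to_regex(template: str) -> re.Pattern[str]:
    """Compile an OpenAPI path template such as /pets/{petId} into a regex."""
    # The capturing group keeps the {param} pieces in the split result.
    pieces = re.split(r"(\{[^/{}]+\})", template)
    pattern = "".join(
        "[^/]+" if piece.startswith("{") else re.escape(piece) for piece in pieces
    )
    return re.compile(f"^{pattern}$")


def match_transaction(host: str, path: str, api_host: str, base_path: str,
                      path_templates: list[str]) -> str | None:
    """Return the matching path template, or None for an unknown transaction."""
    if host.lower() != api_host.lower():
        return None  # requested hostname:port does not belong to this API
    if not path.startswith(base_path):
        return None
    relative = path[len(base_path):] or "/"
    # OpenAPI matches concrete paths before templated ones, so try those first.
    for template in sorted(path_templates, key=lambda t: t.count("{")):
        if template_to_regex(template).match(relative):
            return template
    return None
```

With templates ["/pets", "/pets/{petId}", "/pets/mine"], a request for /v1/pets/42 on the API's host maps to /pets/{petId}, /v1/pets/mine maps to the concrete /pets/mine, and /v1/admin or any other host returns None and would be logged as unknown.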

Depending on the nature of your API, transaction logs could include personally identifiable information that falls under, for example, GDPR regulations. To facilitate the requirements from these regulations, such as the right to be forgotten, it is possible to delete transaction logs from 42Crunch Platform. See Delete transaction logs.

Error logs

API Firewall produces two types of error logs:

- error.log: Errors that occurred when the API Firewall instance was starting up (initialization errors)

- vh-<virtual host UUID>.error.log: Errors that occurred during virtual host runtime (runtime errors)

You can control the level of detail that gets written in the error logs with the following environment variables:

- ERROR_LOG_LEVEL: Level of detail in error.log

- LOG_LEVEL: Level of detail in vh-<UUID>.error.log

API Firewall supports the standard Apache log levels. We recommend using the values warn, info, or error, depending on the granularity you need. The values debug, trace1, trace5, and trace7 should not be used in production, but may be used when troubleshooting issues. For more details, see API Firewall variables.
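As a pre-deployment sanity check, the recommendation above can be sketched in Python. The function check_log_levels is hypothetical; it validates ERROR_LOG_LEVEL and LOG_LEVEL against the standard Apache level names and, as an assumption, treats debug and every trace level (not only the ones named above) as troubleshooting-only. Values are compared exactly as written, since this section does not say whether API Firewall accepts other spellings.

```python
from __future__ import annotations

import os
from collections.abc import Mapping

# Standard Apache log levels, from least to most verbose.
APACHE_LEVELS = ["emerg", "alert", "crit", "error", "warn", "notice", "info", "debug"] + [
    f"trace{n}" for n in range(1, 9)
]


def check_log_levels(env: Mapping[str, str], production: bool) -> list[str]:
    """Flag ERROR_LOG_LEVEL / LOG_LEVEL values that are invalid or too verbose."""
    warnings: list[str] = []
    for var in ("ERROR_LOG_LEVEL", "LOG_LEVEL"):
        value = env.get(var)
        if value is None:
            continue  # variable not set: nothing to check
        level = value.strip()
        if level not in APACHE_LEVELS:
            warnings.append(f"{var}={value!r} is not an Apache log level")
        elif production and (level == "debug" or level.startswith("trace")):
            # Assumption: every trace level is treated like the ones named above.
            warnings.append(f"{var}={level} is for troubleshooting, not production")
    return warnings


if __name__ == "__main__":
    print(check_log_levels(os.environ, production=True))
```

Running this in the environment that will start the container (or against the variables in your deployment manifest, loaded into a dict) catches a leftover trace5 before it reaches production.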

Access logs

Access logs (vh-<virtual host UUID>.access.log) are the legacy transaction logs that you are often required to enable for legal reasons. They mostly consist of one line per request or transaction, in a format such as the Common Log Format.

Log destination

Different logs have different destinations where they are published by default:

- Transaction logs go to 42Crunch Platform so that you can monitor the real-time traffic in the dashboards and Trace Explorer.

- System logs on access and errors from the API Firewall instance itself are located under /opt/guardian/logs in the file system of the API Firewall container.

However, you can use the API-specific variable LOG_DESTINATION to change where transaction logs and error logs are published. The variable can take the following values:

| Value | Impact |
|---|---|
| PLATFORM | Transaction logs are published to 42Crunch Platform. Error logs and access logs are written to the file system of the API Firewall container. This is the default value. |
| FILES | All transaction, error, and access logs are written to a directory that you mount to your deployment as a volume for |
| STDOUT | All transaction, error, and access logs are written as standard output (STDOUT) to console. |
| FILES+STDOUT | All transaction, error, and access logs are written to a directory that you mount to your deployment as a volume for |
| PLATFORM+STDOUT | Transaction logs are published to 42Crunch Platform and written as standard output to console. Error logs and access logs are written to the file system of the API Firewall container and as standard output to console. |

Log destination PLATFORM (alone or with STDOUT) is not suitable for firewall instances running in production environments due to the volume of logs produced. We recommend setting the log destination to FILES or STDOUT (or both) in production or for performance testing.

Using the default size for the container file system is fine for testing, or when you can be sure that the log volume stays low. However, the volume of logs can rapidly increase, especially in production, so if you want to use PLATFORM or FILES (alone or with STDOUT), you must plan for this growth to avoid running out of disk space in your API Firewall container. Either reserve enough disk space, or mount an external disk as a volume.

If you use only STDOUT, then all logs are pushed out of the container, and container disk space is not a concern.
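The guidance on LOG_DESTINATION can be condensed into a small Python check. The function review_log_destination is hypothetical; it accepts only the five values from the LOG_DESTINATION table, falls back to PLATFORM when the variable is unset (the documented default), flags PLATFORM in production, and reminds you to plan disk space whenever logs are written inside the container (PLATFORM or FILES).

```python
from __future__ import annotations

# Values accepted by LOG_DESTINATION, as listed in the table in this section.
VALID_DESTINATIONS = {"PLATFORM", "FILES", "STDOUT", "FILES+STDOUT", "PLATFORM+STDOUT"}
DISK_NOTE = "reserve enough disk space or mount an external volume for logs"


def review_log_destination(value: str | None, production: bool) -> list[str]:
    """Return suitability and sizing notes for a LOG_DESTINATION value."""
    destination = (value or "PLATFORM").strip()  # PLATFORM is the default
    if destination not in VALID_DESTINATIONS:
        raise ValueError(f"unsupported LOG_DESTINATION: {value!r}")
    targets = set(destination.split("+"))
    notes: list[str] = []
    if production and "PLATFORM" in targets:
        notes.append("PLATFORM is not suitable for production; use FILES and/or STDOUT")
    if targets & {"PLATFORM", "FILES"}:
        # Some logs stay in the container file system, so disk usage grows with traffic.
        notes.append(DISK_NOTE)
    return notes
```

For instance, STDOUT alone produces no notes because all logs leave the container, while an unset variable in production yields both notes.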


For more details, see Switch log destination for API Firewall logs.

If you want to write your own plugins that are fed by STDOUT, you can find a breakdown of the log syntax in API Firewall log line format for standard output.

API Firewall health check

API Firewall instances provide a dedicated endpoint /hc for health check calls that, for example, load balancers can use to check that the instance is up and running.

By default, health check calls are made over HTTP on port 8880, but you can also configure them to use HTTPS, in which case they use port 8843. The API Firewall container (or AWS task) must expose the port in use, while the load balancer must not expose it. Both ports are reserved for the health check, so API consumers cannot call them.

API Firewall always responds with HTTP 200 OK to indicate all is well. Health check calls do not generate any logs.
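Load balancers and orchestrators normally perform this probe themselves, but a standalone probe is handy for scripts or debugging. The Python sketch below uses only the standard library; the function name firewall_is_healthy is hypothetical, and it treats anything other than HTTP 200 from /hc (including timeouts and refused connections) as unhealthy, using the default ports 8880 (HTTP) and 8843 (HTTPS) described above.

```python
from __future__ import annotations

import http.client
import urllib.request


def firewall_is_healthy(host: str, use_https: bool = False, port: int | None = None,
                        timeout: float = 2.0, context=None) -> bool:
    """Call the /hc endpoint of an API Firewall instance; True only on HTTP 200."""
    scheme = "https" if use_https else "http"
    if port is None:
        port = 8843 if use_https else 8880  # default health check ports
    url = f"{scheme}://{host}:{port}/hc"
    try:
        # `context` can carry an ssl.SSLContext, for example to trust a private CA.
        with urllib.request.urlopen(url, timeout=timeout, context=context) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError (error status), timeouts, and refused connections land here.
        return False
```

For an HTTPS health check with a certificate signed by your own CA, you could pass ssl.create_default_context(cafile=...) as context.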

For more details on how to set up the health check for different systems, see Configure health check for API Firewall.

What is...

API Firewall deployment architecture

Kubernetes Injector for API Firewall

Protections and security extensions

How to...

Deploy API Firewall with Kubernetes Injector

Manage API Firewall configuration

Deactivate automatic contract enforcement in API Firewall

Monitor APIs and API Firewall instances

Learn more...

How API Firewall validates API traffic

x-42c extensions for API Protection and API Firewall